10 min read

Apr 21, 2024


Introduction:

In today’s digital age, where the demand for online content is skyrocketing, ensuring fast and reliable delivery of web pages, images, videos, and other digital assets to users worldwide is essential.

We all rely on the internet for everything from watching videos to getting work done. But what happens when websites load slowly? It’s frustrating!

This is where Content Delivery Networks (CDNs) come into play. Let’s explore what CDNs are, why they are needed, and how they work, along with real-world examples and optimization strategies.

What is a CDN?

A Content Delivery Network (CDN) is a geographically distributed network of servers and data centers designed to deliver web content to users more efficiently and reliably. It works by caching content at edge servers located closer to end-users, reducing latency and improving website performance.

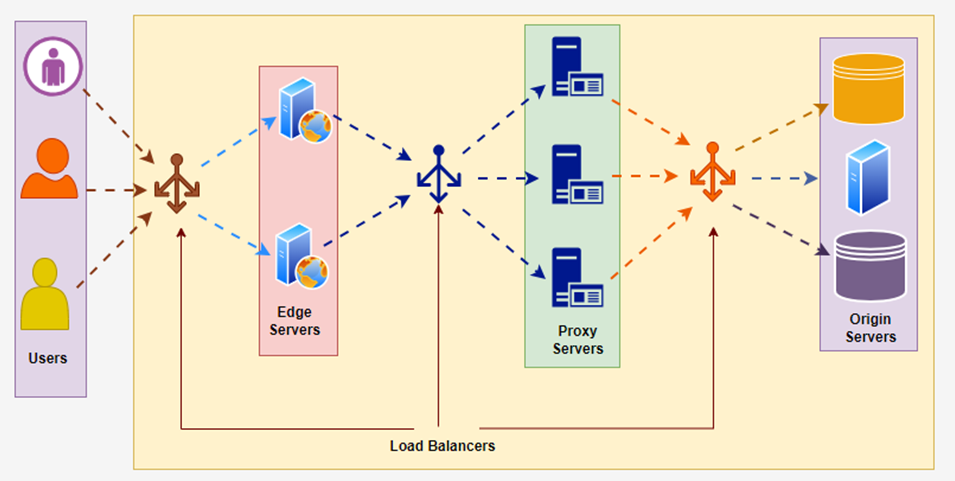

CDN Layers

Why is a CDN Needed?

CDNs are needed to address the following challenges:

1. Latency Reduction: By serving content from servers closer to users, CDNs minimize the time it takes for data to travel, resulting in faster load times and improved user experience.

2. Scalability: CDNs can handle high traffic loads and sudden spikes in demand by distributing content across multiple servers, preventing website crashes and downtime.

3. Reliability: CDNs improve the reliability of content delivery by reducing the risk of server failures and network congestion. They also provide redundancy and failover mechanisms to ensure continuous availability.

Let’s define a few terms that come up frequently when discussing CDNs.

· PoP (Point of Presence): A network location where CDN infrastructure is placed to cache and deliver content, enhancing performance for end-users.

· Edge Server: A distributed server at the edge of a CDN network, caching and serving content to users to reduce latency and optimize delivery.

· Proxy Server: An intermediary server in a CDN that caches and delivers content to users, reducing load on origin servers and improving performance.

Components of a CDN

A CDN comprises several components that work together to deliver content efficiently:

1. Client: The end-user device (e.g., laptop, smartphone) that requests content from the CDN.

2. Routing System: Determines the optimal path for content delivery based on factors like network proximity and server availability.

3. Scrubber Servers: Filter out malicious traffic and protect against cyber threats like DDoS attacks.

4. Proxy Servers: Cache and serve content to users, reducing the load on origin servers and improving performance.

5. Distribution System: Manages the distribution of content to edge servers and ensures consistent delivery across the network.

6. Origin Servers: Store the original copy of content and provide it to the CDN for caching and distribution.

7. Load Balancers: These components distribute incoming traffic across multiple servers to optimize performance and ensure high availability.

8. Content Optimization Tools: These tools preprocess and optimize content before caching and delivery, enhancing performance and reducing bandwidth usage.

9. Logging and Monitoring Tools: These tools track CDN performance metrics, monitor server health, and provide insights into traffic patterns and usage trends.

10. Management System: Controls and monitors the operation of the CDN, including configuration, performance monitoring, and analytics.

Working of CDN: Example Workflow

Consider a scenario where a user in India wants to access a popular video streaming website hosted on servers in the United States. Without a CDN, the user’s request would need to travel across long distances, resulting in slow load times and buffering issues. However, with a CDN in place, the workflow is optimized to deliver content efficiently and reliably, leveraging various components of the CDN architecture:

1. Client: The end-user device in India sends a request to access the video streaming website.

2. Routing System: The request is routed to the nearest Point of Presence (PoP) or edge server in India, using load balancers to distribute traffic and optimize content delivery.

3. Proxy Servers: The proxy server in the Indian PoP checks if the requested video content is available in its cache.

4. Content Optimization Tools: If the content is in the cache (a cache hit), it is served directly to the user, which improves performance and reduces bandwidth usage. Otherwise (a cache miss), the request proceeds to the next step.

5. Origin Servers: The proxy server fetches the requested video content from the origin server in the United States.

6. Load Balancers: Load balancers are utilized to distribute incoming requests among multiple origin servers, optimizing resource utilization and ensuring scalability.

7. Distribution System: The fetched content is then distributed to the proxy server in India, as well as other edge servers or PoPs worldwide.

8. Content Caching Strategies: The content is cached at the proxy server in India for future requests, employing caching strategies like push or pull caching to optimize performance and reduce latency.

9. Health Checking: The CDN continuously monitors the health and reliability of proxy servers and origin servers, ensuring efficient content delivery and fault tolerance.

10. Management System: The CDN management system controls and monitors the operation of the CDN, including configuration, performance monitoring, and analytics, to ensure optimal CDN performance and user experience.
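The cache check at the heart of this workflow (steps 3, 5, and 8) can be sketched in a few lines. This is a minimal illustration, not how any particular CDN is implemented: the `OriginServer` and `EdgeProxy` classes, the video path, and the in-memory dictionary cache are all assumptions made for the example.

```python
class OriginServer:
    """Stands in for the origin in the United States (hypothetical)."""

    def __init__(self, content):
        self.content = dict(content)
        self.requests_served = 0

    def fetch(self, path):
        self.requests_served += 1
        return self.content[path]


class EdgeProxy:
    """Stands in for the proxy server in the Indian PoP (hypothetical)."""

    def __init__(self, origin):
        self.origin = origin
        self.cache = {}

    def handle(self, path):
        # Step 3: check the local cache first.
        if path in self.cache:
            return self.cache[path], "HIT"
        # Step 5: on a miss, fetch the content from the origin.
        body = self.origin.fetch(path)
        # Step 8: keep a copy for future requests.
        self.cache[path] = body
        return body, "MISS"


origin = OriginServer({"/videos/intro.mp4": b"video-bytes"})
edge = EdgeProxy(origin)

first = edge.handle("/videos/intro.mp4")
second = edge.handle("/videos/intro.mp4")
print(first[1], second[1], origin.requests_served)  # MISS HIT 1
```

Only the first request crosses the long path to the origin. The second is answered from the edge cache, which is where the latency saving comes from.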

Content Caching Strategies

CDNs employ various content caching strategies to optimize performance:

1. Push Caching: Origin servers proactively push content to edge servers before it is requested, ensuring faster delivery to users.

2. Pull Caching: Edge servers fetch content from origin servers on demand, the first time a user requests it, and cache it for later requests. This reduces storage requirements at the edge and helps keep content fresh.
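To make the difference concrete, here is a small sketch of both strategies. The `Edge` class, the `push` and `pull` helpers, and the file paths are hypothetical names chosen for the example, and the origin is modelled as a plain dictionary.

```python
class Edge:
    """A hypothetical edge server with an in-memory cache."""

    def __init__(self, name):
        self.name = name
        self.cache = {}


def push(origin_content, edges, path):
    """Push caching: the origin copies content to every edge up front."""
    for edge in edges:
        edge.cache[path] = origin_content[path]


def pull(origin_content, edge, path):
    """Pull caching: the edge fetches from the origin only on a cache miss."""
    if path not in edge.cache:
        edge.cache[path] = origin_content[path]
    return edge.cache[path]


origin_content = {"/logo.png": b"png-bytes", "/live.json": b"{}"}
edges = [Edge("mumbai"), Edge("frankfurt")]

# The logo is pushed everywhere before anyone asks for it.
push(origin_content, edges, "/logo.png")
# The JSON file is pulled only by the edge that actually receives a request.
pull(origin_content, edges[0], "/live.json")

for edge in edges:
    print(edge.name, sorted(edge.cache))
```

After this runs, the Mumbai edge holds both files while the Frankfurt edge holds only the pushed one. That illustrates the trade-off: push spends storage everywhere in exchange for a warm cache, while pull stores only what each edge has been asked for.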

Finding the Nearest Proxy Server

Finding the nearest proxy server to fetch data involves determining the server closest to the requesting client to minimize latency and optimize performance. Two critical factors influence the proximity of the proxy server:

1. Network Distance: The distance between the user and the proxy server plays a crucial role. It depends on two main factors:

· Length of the Network Path: The length of the network path depends on the physical distance between the user and the proxy server and on the number of hops the traffic takes along the way. Shorter paths typically result in lower latency.

· Capacity (Bandwidth) Limits: The capacity or bandwidth along the network path also impacts proximity. Optimal proximity involves selecting the server with the shortest path and the highest available bandwidth. This ensures faster content delivery to the user.

2. Requests Load: The load on a proxy server at any given time, known as request load, is another vital consideration. If a group of proxy servers is experiencing high loads, the request routing system should redirect requests to servers with lower loads. This action helps balance the load across proxy servers and reduces response latency for users.
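One way to combine these factors is a single score per proxy server. The sketch below is only an illustration: the `Proxy` fields, the 0.9 load cutoff, and the weighting formula are assumptions made for the example, not values taken from any real CDN.

```python
from dataclasses import dataclass


@dataclass
class Proxy:
    name: str
    rtt_ms: float          # measured round-trip time, a stand-in for path length
    bandwidth_mbps: float  # available capacity along the path
    load: float            # fraction of capacity in use, 0.0 to 1.0


def score(proxy, max_load=0.9):
    """Lower is better. Overloaded proxies get an infinite score."""
    if proxy.load >= max_load:
        return float("inf")
    # Hypothetical weighting: latency inflated by load, discounted by bandwidth.
    return proxy.rtt_ms * (1.0 + proxy.load) / proxy.bandwidth_mbps


def pick_proxy(proxies):
    best = min(proxies, key=score)
    if score(best) == float("inf"):
        raise RuntimeError("no proxy has spare capacity")
    return best


proxies = [
    Proxy("mumbai", rtt_ms=12, bandwidth_mbps=1000, load=0.95),
    Proxy("chennai", rtt_ms=20, bandwidth_mbps=1000, load=0.40),
    Proxy("singapore", rtt_ms=60, bandwidth_mbps=1000, load=0.10),
]
chosen = pick_proxy(proxies)
print(chosen.name)  # chennai
```

Mumbai has the shortest path but is skipped because it is overloaded, so the request goes to Chennai, the next-closest server with spare capacity.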

CDNs use various techniques to route user requests to the nearest proxy server.

1. DNS Redirection: DNS-based redirection maps a domain name to the IP address of the proxy server nearest to the client. When a client sends a DNS query to resolve the domain name, the CDN’s DNS server responds with the IP address of the nearest proxy server, directing the client to that server for content retrieval.

2. Anycast: Anycast routing is a network addressing and routing technique that directs data packets to the nearest or best-performing node among a group of servers that share the same IP address. With Anycast, multiple proxy servers advertise the same IP address, and routers automatically route traffic to the nearest server based on network topology, minimizing latency and improving reliability.

3. Client Multiplexing: Client multiplexing involves giving the client a list of candidate proxy servers. The client can then connect to several of them and choose one, for example the one with the lowest latency, for content retrieval.

4. HTTP Redirection: HTTP-based redirection involves the proxy server redirecting the client to a closer server using HTTP status codes such as 301 (Moved Permanently) or 302 (Found). When the client sends a request to the proxy server, the server evaluates the client’s location and redirects the request to the nearest server for data retrieval.
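DNS redirection and HTTP redirection can both be sketched around the same "find the nearest PoP" helper. Everything in this example is an assumption made for illustration: the PoP names and coordinates, the `cdn.example` hostnames, and the use of great-circle distance as the measure of "nearest". A production CDN would rely on network measurements rather than plain geography, and a real DNS server sees the resolver's IP address rather than the client's coordinates.

```python
import math

# Hypothetical PoPs: name -> (latitude, longitude, IP from the documentation range)
POPS = {
    "mumbai": (19.08, 72.88, "192.0.2.10"),
    "frankfurt": (50.11, 8.68, "192.0.2.20"),
    "virginia": (38.95, -77.45, "192.0.2.30"),
}


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points on Earth in kilometres."""
    r = 6371.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def nearest_pop(client_lat, client_lon):
    return min(
        POPS,
        key=lambda name: haversine_km(client_lat, client_lon, *POPS[name][:2]),
    )


def resolve(client_lat, client_lon):
    """DNS-style redirection: answer with the nearest PoP's IP address."""
    return POPS[nearest_pop(client_lat, client_lon)][2]


def http_redirect(path, serving_pop, client_lat, client_lon):
    """HTTP-style redirection: 302 to a closer PoP, or 200 if already nearest."""
    target = nearest_pop(client_lat, client_lon)
    if target == serving_pop:
        return 200, {}
    return 302, {"Location": f"https://{target}.cdn.example{path}"}


# A client in Delhi (approximate coordinates).
print(resolve(28.61, 77.21))
print(http_redirect("/intro.mp4", "virginia", 28.61, 77.21))
```

With DNS redirection the client never contacts the distant server at all. With HTTP redirection it pays for one extra round trip to the wrong server before being sent to the right one.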

CDN Proxy Server Placement

CDN providers strategically place proxy servers in data centers worldwide, considering factors like population density, network infrastructure, and traffic patterns. This ensures optimal coverage and performance for users in different regions.

Positioning CDN proxy servers strategically is crucial for efficient content delivery. Consider the following placement options:

1. On-Premises: This involves setting up CDN proxy servers in smaller data centers located near major Internet Exchange Points (IXPs). These data centers benefit from direct peering with numerous networks, ensuring optimal connectivity and faster content delivery.

2. Off-Premises: Placing CDN proxy servers within Internet Service Providers’ (ISPs) networks is another effective strategy. By leveraging ISP infrastructure, CDN providers can distribute proxy servers across diverse geographic locations, reducing latency and improving content delivery speed for end-users.

Akamai and Netflix popularized the idea of keeping their CDN proxy servers inside the client’s ISPs. What benefits could an ISP get by placing the CDN proxy servers inside their network? Let me know your thoughts in the comments section.

CDN as a service

Most companies don’t build their own CDN. Instead, they use the services of a CDN provider, such as Akamai, Cloudflare, Fastly, and so on, to deliver their content. Similarly, players like AWS make it possible for anyone to use a global CDN facility.

Multi-Tier CDN Architecture

In a Content Delivery Network (CDN), distributing content to a large number of clients simultaneously while minimizing the burden on the origin server is a critical challenge. To address this challenge, CDNs implement a tree-like structure to facilitate efficient data distribution from the origin server to the CDN proxy servers.

In this structure, the CDN proxy servers are organized into a hierarchy resembling a tree, with edge proxy servers at the bottom and parent proxy servers above them. The edge proxy servers, which are closest to the end-users, have peer servers at the same level of the hierarchy. These edge servers receive data from the parent nodes in the tree, which, in turn, receive data from the origin servers.

The data distribution process involves copying content from the origin server to the proxy servers by traversing different paths within the tree structure. Each level of the hierarchy plays a role in propagating content downstream to the edge servers, ensuring efficient delivery to end-users.

The tree structure offers several benefits:

1. Scalability: By adding more server nodes to the tree as needed, the CDN can scale its infrastructure to accommodate increasing numbers of users. New servers can be added at various levels of the hierarchy to handle the growing demand for content delivery.

2. Load Distribution: Distributing content across multiple levels of the tree reduces the burden on the origin server for data distribution. Content is cached and served from edge servers closer to the end users, minimizing latency and improving performance.

3. Redundancy and Fault Tolerance: The hierarchical structure provides redundancy and fault tolerance. If a proxy server or a node in the tree fails, other nodes can still serve content to users, ensuring uninterrupted service.

A typical CDN may consist of one or two tiers of proxy servers, each serving a specific function in the data distribution process. By leveraging a tree-like structure, CDNs can efficiently deliver content to users worldwide while optimizing performance and minimizing the load on origin servers.
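The effect on the origin server can be seen by counting how many copies each node has to send. The sketch below assumes a two-tier tree with hypothetical parent and edge names, and the `distribute` helper is written only for this example.

```python
def distribute(origin_content, tree):
    """Copy content down a two-tier tree and count transfers per sender.

    `tree` maps each parent proxy name to the list of its edge proxy names.
    """
    transfers = {"origin": 0}
    caches = {}
    for parent, children in tree.items():
        caches[parent] = dict(origin_content)     # origin -> parent
        transfers["origin"] += 1
        transfers[parent] = 0
        for child in children:
            caches[child] = dict(caches[parent])  # parent -> edge
            transfers[parent] += 1
    return caches, transfers


tree = {
    "parent-asia": ["edge-mumbai", "edge-chennai", "edge-singapore"],
    "parent-europe": ["edge-frankfurt", "edge-london", "edge-paris"],
}
caches, transfers = distribute({"/intro.mp4": b"video-bytes"}, tree)
print(transfers)  # {'origin': 2, 'parent-asia': 3, 'parent-europe': 3}
```

All six edge servers end up with the content, but the origin sends only two copies instead of six. The parents absorb the rest of the fan-out.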

If a child proxy server, a parent proxy server, or an origin server fails, the CDN is designed to handle the failure:

1. Child Proxy Server Failure: If a child proxy server fails, DNS (Domain Name System) can redirect client requests to another functioning child-level proxy server within the same Point of Presence (PoP).

2. Parent Proxy Server Failure: Each child proxy server is aware of multiple upper-layer parent servers. If one parent server fails, the child proxy servers can switch to an alternate parent server to maintain service continuity. This ensures that even if one level of proxy servers encounters an issue, the system can route traffic through backup servers.

3. Origin Server Failure: The origin is a set of servers with redundant backups that store the original content. Because the content is replicated across multiple origin servers, the remaining origin servers take on the additional load if one of them fails. This redundancy keeps content accessible, minimizing downtime for end-users.
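The failover behaviour in all three cases follows the same pattern: keep an ordered list of upstream servers and use the first one that responds. Here is a minimal sketch, where the `Node` class, the server names, and the use of `ConnectionError` to signal a failed server are assumptions made for the example.

```python
class Node:
    """A hypothetical parent proxy or origin server."""

    def __init__(self, name, content=None, healthy=True):
        self.name = name
        self.content = content or {}
        self.healthy = healthy

    def get(self, path):
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        return self.content[path]


def fetch_with_failover(path, upstreams):
    """Try each upstream in order; return (body, name) from the first healthy one."""
    for node in upstreams:
        try:
            return node.get(path), node.name
        except ConnectionError:
            continue  # this upstream failed, try the next one
    raise ConnectionError("all upstreams are down")


data = {"/intro.mp4": b"video-bytes"}
parent_a = Node("parent-a", data, healthy=False)  # simulated failure
parent_b = Node("parent-b", data)

body, served_by = fetch_with_failover("/intro.mp4", [parent_a, parent_b])
print(served_by)  # parent-b
```

The same function would work for a child choosing between parents or a parent choosing between replicated origin servers. Only the list of upstreams changes.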

Ensuring Data Consistency

CDNs employ mechanisms like cache invalidation and time-to-live (TTL) settings to ensure data consistency between origin servers and edge caches. Cache invalidation removes outdated content from caches, while TTL settings control how long content remains cached before it expires and needs to be refreshed from the origin server.
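Both mechanisms fit in a small cache class. This is a sketch under stated assumptions: the `TTLCache` name, the 60-second TTL, and the injectable `clock` parameter (used here so the example can simulate time passing) are all illustrative choices, not part of any CDN's API.

```python
import time


class TTLCache:
    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries = {}  # path -> (body, expires_at)

    def put(self, path, body):
        self.entries[path] = (body, self.clock() + self.ttl)

    def get(self, path):
        """Return the cached body, or None if it is missing or expired."""
        entry = self.entries.get(path)
        if entry is None:
            return None
        body, expires_at = entry
        if self.clock() >= expires_at:
            del self.entries[path]  # expired: must be refreshed from the origin
            return None
        return body

    def invalidate(self, path):
        """Explicitly drop an entry, e.g. after the origin publishes a new version."""
        self.entries.pop(path, None)


now = [0.0]  # fake clock so the example does not need to sleep
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])

cache.put("/index.html", b"v1")
fresh = cache.get("/index.html")    # still within the TTL
now[0] = 61.0
expired = cache.get("/index.html")  # TTL has passed

cache.put("/index.html", b"v2")
cache.invalidate("/index.html")
invalidated = cache.get("/index.html")
print(fresh, expired, invalidated)  # b'v1' None None
```

TTL expiry bounds how stale an entry can get without any coordination, while invalidation lets the origin force an immediate refresh when it knows the content has changed.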

Conclusion

In conclusion, Content Delivery Networks (CDNs) play a vital role in optimizing the delivery of web content to users worldwide. By leveraging a distributed network of edge servers, CDNs reduce latency, improve reliability, and enhance the overall user experience. With efficient content caching strategies, intelligent routing algorithms, and strategic proxy server placement, CDNs ensure fast and consistent delivery of digital assets, making the internet faster and more accessible for everyone.